Matrix Low Rank Approximation Multiplication

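1. $\|AA^T - BB^T\|$ is small compared with $\|AA^T\|$.
2. Small: $B \in \mathbb{R}^{d \times \ell}$ with $\ell \ll d$.
3. Computationally easy to obtain from $A$.

$$
\begin{aligned}
\|z^T A\|^2 &= z^T A A^T z && (10)\\
&= z^T C C^T z + z^T (AA^T - CC^T) z && (11)\\
&\le \sigma_{k+1}^2(C) + \|AA^T - CC^T\|_2 && (12)\\
&= \sigma_{k+1}(CC^T) + \|AA^T - CC^T\|_2 && (13)\\
&\le \sigma_{k+1}(AA^T) + 2\|AA^T - CC^T\|_2 && (14)\\
&= \sigma_{k+1}^2(A) + 2\|AA^T - CC^T\|_2 && (15)
\end{aligned}
$$

Step (12) uses that $z$ has norm at most 1 and is orthogonal to the top $k$ left singular vectors of $C$. Step (14) is Weyl's inequality, $\sigma_{k+1}(CC^T) \le \sigma_{k+1}(AA^T) + \|AA^T - CC^T\|_2$.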

Matrix Factorization And Latent Semantic Indexing 1 Lecture 13 Matrix Factorization And Latent Semantic Indexing Web Search And Mining Ppt Download

Any matrix $B$ of rank $k$ can be decomposed into a long, skinny matrix times a short, wide one.
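As a hedged illustration of the "long and skinny times short and wide" shape, the sketch below uses the column-row (CR) factorization rather than the SVD. The tall factor keeps the pivot columns of $B$, and the wide factor keeps the nonzero rows of its reduced row echelon form. The helper names `rref`, `cr_factorization`, and `matmul` are hypothetical. Exact `Fraction` arithmetic is assumed so that the product reproduces $B$ without rounding error.

```python
from fractions import Fraction


def rref(M):
    """Reduced row echelon form of M in exact rational arithmetic.

    Returns (R, pivots), where pivots lists the pivot column indices.
    """
    R = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(R), len(R[0])
    pivots = []
    r = 0
    for c in range(cols):
        if r == rows:
            break
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, rows) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]
        # Clear column c in every other row.
        for i in range(rows):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
    return R, pivots


def cr_factorization(B):
    """Split a rank-k matrix B (m x n) into C (m x k) times R (k x n).

    C holds the pivot columns of B; R holds the nonzero rows of rref(B).
    """
    R, pivots = rref(B)
    C = [[Fraction(row[j]) for j in pivots] for row in B]
    return C, R[:len(pivots)]


def matmul(X, Y):
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


B = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]  # 3 x 3, rank 2
C, R = cr_factorization(B)             # C is 3 x 2, R is 2 x 3
print(matmul(C, R) == B)               # True
```

For this rank-2 example, `C` is $3 \times 2$, `R` is $2 \times 3$, and their product is exactly `B`.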

(2018). Fast raypath separation based on low-rank matrix approximation in a shallow-water waveguide. The rank of a product of matrices is no more than the rank of any of its factors. It follows that $QQ^T$ has rank at most $l$; in fact, it has rank exactly $l$. The usual perspective is that the elements of the product matrix should be viewed as

$$(AB)_{ik} = \sum_{j=1}^{n} A_{ij} B_{jk} = A_{i*} B_{*k},$$

where $A_{i*}$ denotes the $i$-th row of $A$ and $B_{*k}$ the $k$-th column of $B$.
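Here is a minimal numeric check of the bound $\operatorname{rank}(AB) \le \min(\operatorname{rank} A, \operatorname{rank} B)$. It assumes small integer matrices and exact rational elimination. `rank` and `matmul` are illustrative helpers, not a library API.

```python
from fractions import Fraction


def rank(M):
    """Exact rank by Gaussian elimination over the rationals."""
    A = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(A), len(A[0])
    r = 0
    for c in range(cols):
        if r == rows:
            break
        p = next((i for i in range(r, rows) if A[i][c] != 0), None)
        if p is None:
            continue  # no pivot in this column
        A[r], A[p] = A[p], A[r]
        for i in range(r + 1, rows):
            f = A[i][c] / A[r][c]
            A[i] = [a - f * b for a, b in zip(A[i], A[r])]
        r += 1
    return r


def matmul(X, Y):
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


A = [[1, 0], [0, 1], [1, 1], [2, -1]]   # 4 x 2, rank 2
B = [[1, 2, 0, 1], [3, 1, 1, 0]]        # 2 x 4, rank 2
AB = matmul(A, B)                       # 4 x 4, but rank(AB) <= 2
print(rank(A), rank(B), rank(AB))       # 2 2 2
```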

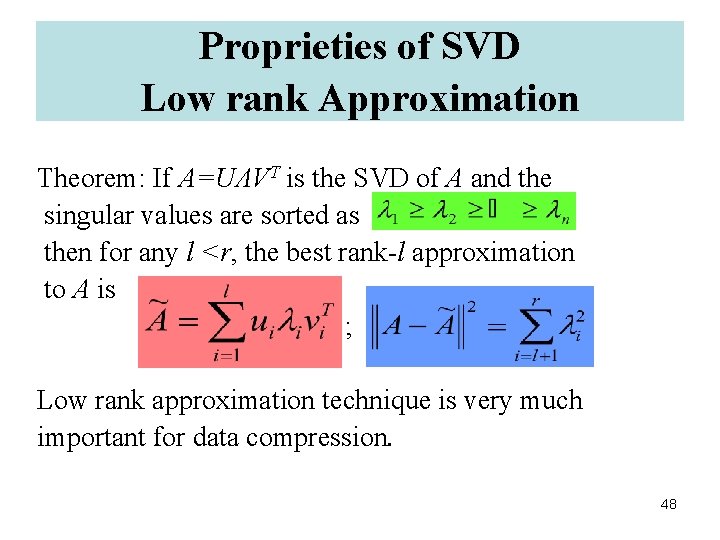

Instead, one should think of matrix multiplication as returning a matrix that equals a sum of outer products, $AB = \sum_{j=1}^{n} A_{*j} B_{j*}$, where $A_{*j}$ is the $j$-th column of $A$ and $B_{j*}$ is the $j$-th row of $B$. A Theoretician's Design Pattern for Approximation and Streaming Algorithms. Remember that the rank of a matrix is the dimension of the linear space spanned by its columns.
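The two views of the product can be compared directly in a short sketch. `matmul_inner` builds each entry as the inner product of a row of $A$ with a column of $B$. `matmul_outer` accumulates the $n$ rank-one outer products of columns of $A$ with rows of $B$. Both function names are hypothetical; on the same input they should return the same matrix.

```python
def matmul_inner(A, B):
    """(AB)[i][k] = inner product of row i of A with column k of B."""
    n = len(B)
    return [[sum(A[i][j] * B[j][k] for j in range(n)) for k in range(len(B[0]))]
            for i in range(len(A))]


def matmul_outer(A, B):
    """AB = sum over j of (column j of A) outer (row j of B)."""
    m, n, p = len(A), len(B), len(B[0])
    C = [[0] * p for _ in range(m)]
    for j in range(n):
        col = [A[i][j] for i in range(m)]  # column j of A
        row = B[j]                          # row j of B
        for i in range(m):
            for k in range(p):
                C[i][k] += col[i] * row[k]  # add one rank-one term
    return C


A = [[1, 2], [3, 4], [5, 6]]
B = [[7, 8, 9], [10, 11, 12]]
print(matmul_inner(A, B) == matmul_outer(A, B))  # True
```

The outer-product form is the one that sampling and sketching methods approximate, because each term is a rank-one matrix that can be kept, dropped, or reweighted.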

If is full-rank then. Summary of results on $\varepsilon$-approximate matrix multiplication $AB$ with respect to the spectral norm; nr and sr denote the nuclear and stable rank of a matrix, respectively. Investigating the influence on the recognition accuracy of the existing model shows that it is possible to reduce up to about 90% of the rank of the data matrices while keeping recognition accuracy within -2% of the baseline.
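Assuming sr denotes the usual stable rank, $\mathrm{sr}(A) = \|A\|_F^2 / \|A\|_2^2$, it can be estimated as below. The spectral norm is obtained by power iteration. This is an illustrative choice that assumes a gap between the top two singular values. `spectral_norm` and `stable_rank` are hypothetical names.

```python
import math
import random


def spectral_norm(A, iters=500, seed=0):
    """Estimate ||A||_2 by power iteration on A^T A.

    Assumes the top singular value is separated from the next one.
    """
    rng = random.Random(seed)
    n = len(A[0])
    v = [rng.gauss(0.0, 1.0) for _ in range(n)]
    for _ in range(iters):
        Av = [sum(a * x for a, x in zip(row, v)) for row in A]
        w = [sum(A[i][j] * Av[i] for i in range(len(A))) for j in range(n)]
        norm_w = math.sqrt(sum(x * x for x in w))
        v = [x / norm_w for x in w]  # keep v a unit vector
    Av = [sum(a * x for a, x in zip(row, v)) for row in A]
    return math.sqrt(sum(x * x for x in Av))


def stable_rank(A):
    """sr(A) = ||A||_F^2 / ||A||_2^2, which never exceeds rank(A)."""
    fro_sq = sum(x * x for row in A for x in row)
    return fro_sq / spectral_norm(A) ** 2


print(stable_rank([[3, 0], [0, 4]]))  # close to 25/16 = 1.5625
print(stable_rank([[1, 2], [2, 4]]))  # rank one, so close to 1.0
```

Unlike the rank, the stable rank changes continuously with the entries, which is why bounds stated in terms of sr can be much smaller than bounds in terms of rank.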

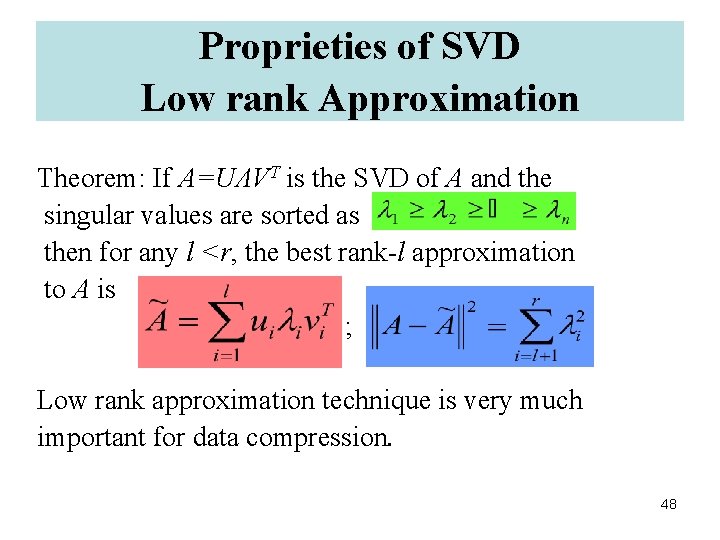

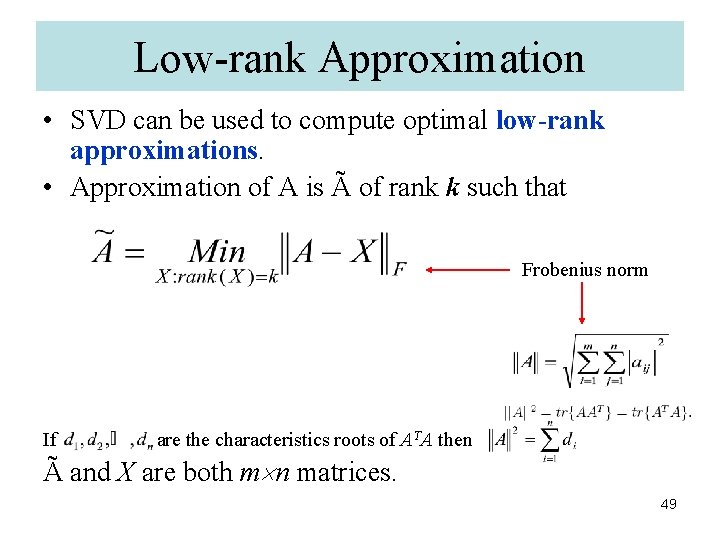

Equation (6) follows because, for any matrix $B$, $\|B\|_2 = \sigma_1(B) = \sigma_1(B^T) = \|B^T\|_2$, and equation (9) follows because $y$ projected onto $H_k$ is $y$ and $z$ projected onto $H_k$ is $0$. Multiplication by a full-rank square matrix preserves rank. In mathematics, low-rank approximation is a minimization problem in which the cost function measures the fit between a given matrix (the data) and an approximating matrix (the optimization variable), subject to the constraint that the approximating matrix has reduced rank.
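The rank-preservation fact can be checked on a small example, assuming exact rational arithmetic. Multiplying $A$ on the right by an invertible $3 \times 3$ matrix `S` keeps its rank, whereas a singular `S_sing` can lower it. All names are illustrative.

```python
from fractions import Fraction


def rank(M):
    """Exact rank by Gaussian elimination over the rationals."""
    A = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(A), len(A[0])
    r = 0
    for c in range(cols):
        if r == rows:
            break
        p = next((i for i in range(r, rows) if A[i][c] != 0), None)
        if p is None:
            continue
        A[r], A[p] = A[p], A[r]
        for i in range(r + 1, rows):
            f = A[i][c] / A[r][c]
            A[i] = [a - f * b for a, b in zip(A[i], A[r])]
        r += 1
    return r


def matmul(X, Y):
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


A = [[1, 0, 0], [0, 1, 0]]                   # 2 x 3, rank 2
S = [[1, 1, 0], [0, 1, 1], [1, 0, 1]]        # invertible (determinant 2)
S_sing = [[1, 1, 0], [1, 1, 0], [0, 0, 1]]   # singular (rank 2)

print(rank(A), rank(matmul(A, S)), rank(matmul(A, S_sing)))  # 2 2 1
```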

Find $X$ such that $\operatorname{rank}(X)$ is less than $r$, a prescribed bound, and $\|A - X\|$ is small. The original matrix $A$ is described by $nd$ numbers, while describing $Y_k$ and $Z^T$ requires only $k(n+d)$ numbers. In this work we focus on the case where $T(x)$ is a Toeplitz-like matrix encoding a convolution relationship of the form $T(x)h$ P.
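The storage comparison is simple arithmetic. This sketch assumes $A$ is $n \times d$, $Y_k$ is $n \times k$, and $Z^T$ is $k \times d$. The function names and the example sizes are hypothetical.

```python
def dense_count(n, d):
    """Numbers needed to store an n x d matrix A entry by entry."""
    return n * d


def factored_count(n, d, k):
    """Numbers needed to store Y_k (n x k) and Z^T (k x d)."""
    return n * k + k * d


def saves_space(n, d, k):
    """True when the factored form is strictly smaller: k(n + d) < nd."""
    return factored_count(n, d, k) < dense_count(n, d)


print(dense_count(10_000, 1_000))         # 10000000
print(factored_count(10_000, 1_000, 20))  # 220000
```

The factored form pays off only when $k < nd/(n+d)$. For a $4 \times 4$ matrix with $k = 2$, both forms need 16 numbers.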

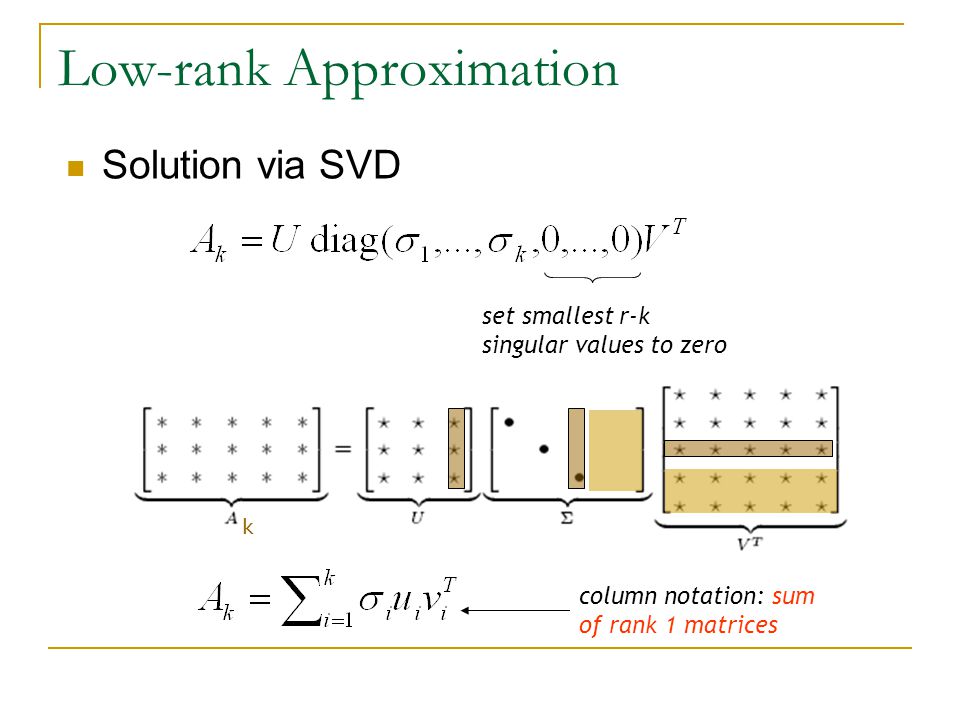

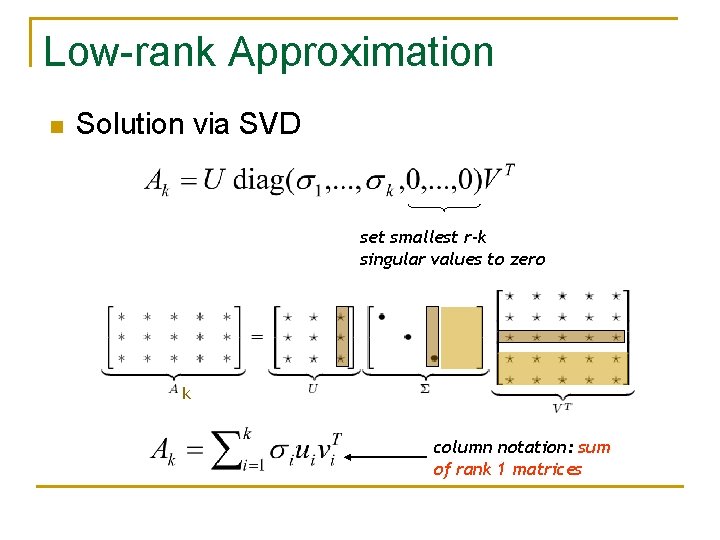

$(1+\varepsilon)\|A - A_k\|$ for all $k \le \operatorname{rank}(A)$. Set $A \in \mathbb{R}^{m \times n}$. More specifically, if an $m \times n$ matrix has rank $r$, its compact SVD representation is the product of an $m \times r$ matrix, an $r \times r$ diagonal matrix, and an $r \times n$ matrix.
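Written out, the compact SVD of a rank-$r$ matrix $A \in \mathbb{R}^{m \times n}$ and its storage cost are as follows. The notation $U_r$, $\Sigma_r$, $V_r$ is assumed here rather than taken from the source. Because $\Sigma_r$ is diagonal, only its $r$ diagonal entries need storing.

$$
A = U_r \Sigma_r V_r^T, \qquad
U_r \in \mathbb{R}^{m \times r}, \quad
\Sigma_r = \operatorname{diag}(\sigma_1, \ldots, \sigma_r) \in \mathbb{R}^{r \times r}, \quad
V_r^T \in \mathbb{R}^{r \times n},
$$

$$
\text{storage: } mr + r + rn = r(m + n + 1) \text{ numbers, versus } mn \text{ for } A \text{ itself.}
$$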

This survey paper on the Low Rank Approximation (LRA) of matrices provides a broad overview of recent progress in the field, with perspectives from both the Theoretical Computer Science (TCS) and. Algorithms have been proposed for low-rank matrix factorizations such as the singular value and QR decompositions [11]. Proposition. Let be a matrix and a square matrix.

Motivated by the above formulation, we propose a weighted low-rank approximation problem that generalizes the constrained low-rank approximation problem of Golub, Hoffman, and Stewart. Another important fact is that the rank of a matrix does not change when we multiply it by a full-rank square matrix. Low-rank matrix approximation problem.
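The weighted objective can be sketched under one assumption: the weights enter as an entrywise (Hadamard) product with the residual, $\|W \circ (A - X)\|_F^2$. This is one common way to write weighted low-rank approximation, not necessarily the exact formulation of the work cited above. `weighted_error` is a hypothetical helper that only evaluates the objective; it does not solve for $X$.

```python
def weighted_error(A, X, W):
    """Weighted Frobenius objective ||W o (A - X)||_F^2.

    W multiplies the residual entry by entry (Hadamard product).
    """
    return sum((w * (a - x)) ** 2
               for w_row, a_row, x_row in zip(W, A, X)
               for w, a, x in zip(w_row, a_row, x_row))


A = [[1, 2], [3, 4]]
X = [[1, 2], [3, 0]]      # wrong only in the bottom-right entry
ones = [[1, 1], [1, 1]]   # all weights 1: plain squared Frobenius error
mask = [[1, 1], [1, 0]]   # weight 0: that entry is ignored

print(weighted_error(A, X, ones))  # 16
print(weighted_error(A, X, mask))  # 0
```

With all weights equal to 1, this reduces to the ordinary squared Frobenius error, which truncating the SVD of $A$ minimizes over rank-$k$ matrices. For general weights, truncating the SVD does not in general minimize the weighted objective.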

The problem is used for mathematical modeling and data compression. When $k$ is small relative to $n$ and $d$, replacing the Figure 1. Another approach is based on random projections [13].
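One common form of the random-projection approach approximates $A^T B$ by $(SA)^T (SB)$. This sketch assumes $S$ is a Gaussian matrix of size $t \times n$ with i.i.d. $N(0, 1/t)$ entries; the Gaussian choice is an assumption. The sketch size $t$ is a free parameter. `sketched_product`, `matmul`, and `transpose` are hypothetical helpers.

```python
import math
import random


def matmul(X, Y):
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def transpose(X):
    return [list(col) for col in zip(*X)]


def sketched_product(A, B, t, seed=0):
    """Approximate A^T B by (S A)^T (S B).

    S is t x n with i.i.d. N(0, 1/t) entries, so E[S^T S] = I_n and the
    estimate is unbiased; its error shrinks roughly like 1/sqrt(t).
    """
    n = len(A)
    assert len(B) == n, "A and B must have the same number of rows"
    rng = random.Random(seed)
    std = 1.0 / math.sqrt(t)
    S = [[rng.gauss(0.0, std) for _ in range(n)] for _ in range(t)]
    SA, SB = matmul(S, A), matmul(S, B)  # the small t-row sketches
    return matmul(transpose(SA), SB)
```

Only the sketches `SA` and `SB` need to be kept, so the method is useful when $n$ is large and $t \ll n$.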

In many applications it can be useful to approximate with a low-rank matrix. The Journal of the Acoustical Society of America, 143(4), EL271-EL277. Here each $A_{i*}B_{*k} \in \mathbb{R}$ is a number computed as the inner product of two vectors in $\mathbb{R}^n$.

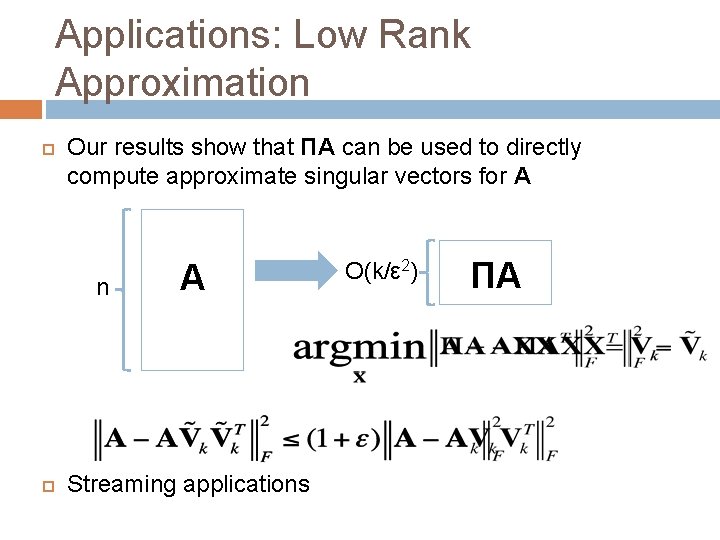

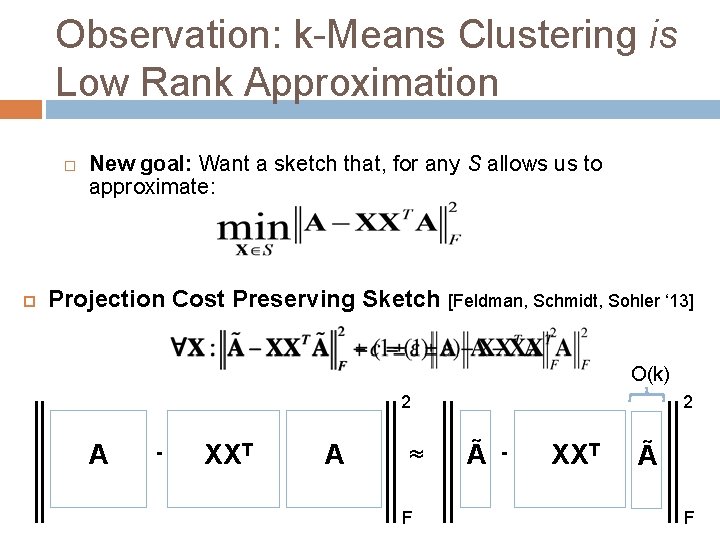

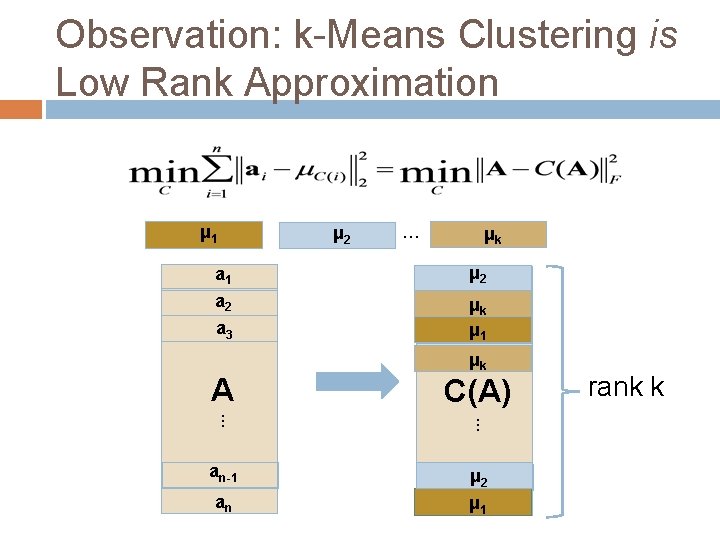

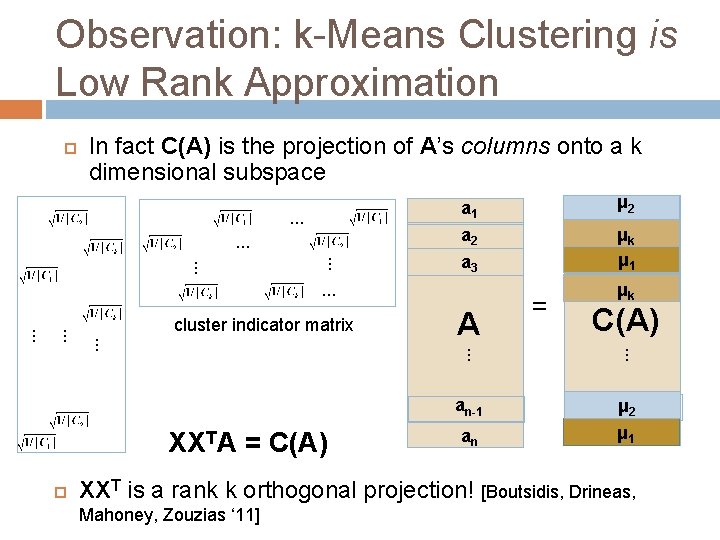

Many alternative approaches to approximate matrix multiplication exist. N t Orank A ε2A P Ak. Matrix approximation: let $P^A_k = U_k U_k^T$ be the best rank-$k$ projection of the columns of $A$. Then

$$\|A - P^A_k A\|_2 = \|A - A_k\|_2 = \sigma_{k+1}(A).$$

Let $P^B_k$ be the best rank-$k$ projection for $B$. Then

$$\|A - P^B_k A\|_2 \le \sqrt{\sigma_{k+1}^2(A) + 2\|AA^T - BB^T\|_2} \qquad \text{[FKV04]}.$$

From this point on, our goal is to find $B$ which is
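A second family samples terms of the outer-product expansion instead of projecting. The sketch below follows the column/row sampling idea associated with Drineas, Kannan, and Mahoney. It draws $c$ indices $j$ with probability proportional to $\|A_{*j}\|\,\|B_{j*}\|$. Each sampled outer product is rescaled so that the estimate of $AB$ is unbiased. `sampled_product` is a hypothetical name, and the sample count `c` is a free parameter.

```python
import math
import random


def sampled_product(A, B, c, seed=0):
    """Estimate AB from c sampled column/row pairs.

    Index j is drawn with probability p_j proportional to
    ||A[:, j]|| * ||B[j, :]||, and each sampled outer product is
    rescaled by 1 / (c * p_j) so the estimate is unbiased.
    """
    rng = random.Random(seed)
    m, n, p = len(A), len(B), len(B[0])
    weights = [math.sqrt(sum(A[i][j] ** 2 for i in range(m)))
               * math.sqrt(sum(x * x for x in B[j]))
               for j in range(n)]
    total = sum(weights)
    probs = [w / total for w in weights]
    est = [[0.0] * p for _ in range(m)]
    for j in rng.choices(range(n), weights=probs, k=c):
        scale = 1.0 / (c * probs[j])
        for i in range(m):
            a = A[i][j] * scale
            for q in range(p):
                est[i][q] += a * B[j][q]
    return est
```

Larger `c` reduces the expected error, at a cost proportional to `c` outer products.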

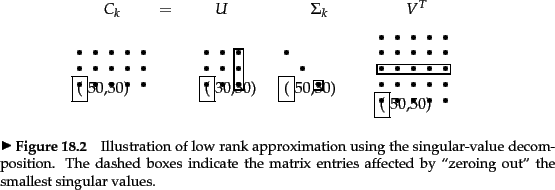

For example, one such proposal leverages Fast Fourier Transforms (FFTs) and treats matrix multiplication as low-rank polynomial multiplication [12]. The primary advantage of using is to eliminate many redundant columns of zeros in, thereby explicitly eliminating multiplication by columns that do not affect the low-rank approximation. This version of the SVD is sometimes known as the reduced SVD or truncated SVD, and it is a computationally simpler representation from which to compute the low-rank approximation.

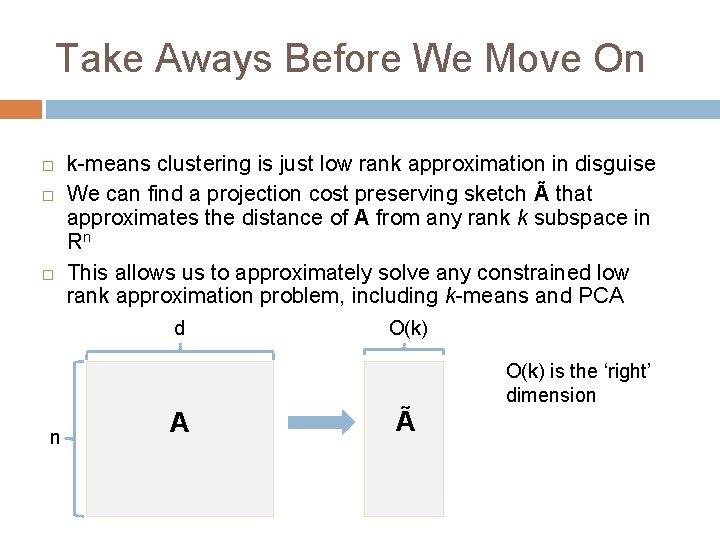

Given $A$, find a rank-$k$ matrix $B$ that approximates $A$ in operator norm. (2018). Coresets-Methods and History. Low-rank approximations: we consider a matrix with SVD given as in the SVD theorem.

A low-rank approximation provides a lossy, compressed version of the matrix. Here the singular values are arranged in decreasing order. $P(x) = b$.
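For reference, write $A = \sum_i \sigma_i u_i v_i^T$ with $\sigma_1 \ge \sigma_2 \ge \cdots \ge 0$. The truncated sum and its errors are given below. By the Eckart-Young-Mirsky theorem, $A_k$ is a best rank-$k$ approximation in both the spectral and the Frobenius norm, with $\sigma_{k+1} = 0$ once $k \ge \operatorname{rank}(A)$.

$$
A_k = \sum_{i=1}^{k} \sigma_i u_i v_i^T, \qquad
\|A - A_k\|_2 = \sigma_{k+1}, \qquad
\|A - A_k\|_F = \Big( \sum_{i > k} \sigma_i^2 \Big)^{1/2}.
$$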

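| Metric | # of dimensions | Reference |
| --- | --- | --- |
| Rank | $O((r_A + r_B)/\varepsilon^2)$ | [9] |
| Stable rank | $O((\mathrm{sr}_A + \mathrm{sr}_B)/\varepsilon^4)$ | [8] |
| Nuclear norm | $O((\mathrm{nr}_A + \mathrm{nr}_B)/\varepsilon^2)$ | Theorem 1 |

We study a general framework obtained by pointwise multiplication with the weight matrix and consider the. If $r$ is constant, you can multiply this by a.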

Find a low-rank matrix $X \approx A$. Low Rank Approximation is a fundamental computation across a broad range of applications where matrix dimension reduction is required. Proof via Matrix Multiplication Thm.

$$\min_{x} \ \operatorname{rank}[T(x)] \quad \text{s.t.} \quad P(x) = b \qquad (5)$$

where $x \in \mathbb{C}^m$ represents a vectorized array of the k-space data to be recovered, $T(x)$ is a structured lifting of $x$ to a matrix in $\mathbb{C}^{M \times N}$, and $b$ are the known k-space samples. As $QQ^T$ is an $m \times m$ matrix with rank at most $l$, it follows that if $l < m$ then $QQ^T \neq I_m$, since $I_m$ has rank $m$.
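The $QQ^T \neq I_m$ argument can be seen on a small exact example. This assumes a $3 \times 2$ matrix $Q$ with orthonormal columns, so $m = 3$ and $l = 2$. Then $Q^T Q = I_l$, but $QQ^T$ is only the orthogonal projection onto the column space of $Q$, not $I_m$. The helpers are illustrative.

```python
from fractions import Fraction as F


def matmul(X, Y):
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def transpose(X):
    return [list(col) for col in zip(*X)]


def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


# Q is 3 x 2 (m = 3, l = 2) with orthonormal columns (1, 2, 2)/3 and (2, 1, -2)/3.
Q = [[F(1, 3), F(2, 3)],
     [F(2, 3), F(1, 3)],
     [F(2, 3), F(-2, 3)]]

QtQ = matmul(transpose(Q), Q)  # l x l
QQt = matmul(Q, transpose(Q))  # m x m, rank l

print(QtQ == identity(2))  # True: the columns are orthonormal
print(QQt == identity(3))  # False: QQ^T has rank 2 < 3
```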

We derive a fast algorithm for the structured low-rank matrix recovery problem. In this research we propose a method to introduce the low-rank approximation method widely used in the field of scientific and technical computation into the convolution computation. You can also truncate the SVD of a higher-rank matrix to get a low-rank approximation.
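In practice one would call a library routine such as NumPy's `numpy.linalg.svd(A, full_matrices=False)` and keep the first $k$ singular triplets. To stay dependency-free, the sketch below swaps in power iteration with deflation instead. This is slower and assumes distinct leading singular values. `truncated_svd` and `low_rank_approx` are hypothetical names.

```python
import math
import random


def _matvec(M, v):
    return [sum(a * x for a, x in zip(row, v)) for row in M]


def _rmatvec(M, u):
    # Computes M^T u.
    return [sum(M[i][j] * u[i] for i in range(len(M))) for j in range(len(M[0]))]


def _norm(v):
    return math.sqrt(sum(x * x for x in v))


def truncated_svd(A, k, iters=500, seed=0):
    """Leading k singular triplets (sigma, u, v) by power iteration with deflation.

    Illustrative only: assumes the leading singular values are distinct.
    """
    rng = random.Random(seed)
    R = [list(map(float, row)) for row in A]  # residual matrix
    triplets = []
    for _ in range(k):
        v = [rng.gauss(0.0, 1.0) for _ in range(len(A[0]))]
        for _ in range(iters):
            w = _rmatvec(R, _matvec(R, v))  # R^T R v
            nw = _norm(w)
            if nw == 0.0:
                break
            v = [x / nw for x in w]
        u = _matvec(R, v)
        sigma = _norm(u)
        if sigma == 0.0:
            break  # residual is zero: rank(A) < k
        u = [x / sigma for x in u]
        triplets.append((sigma, u, v))
        # Deflate: subtract the rank-one piece sigma * u v^T.
        R = [[R[i][j] - sigma * u[i] * v[j] for j in range(len(v))]
             for i in range(len(u))]
    return triplets


def low_rank_approx(A, k):
    """A_k = sum of sigma_i u_i v_i^T over the leading k triplets."""
    Ak = [[0.0] * len(A[0]) for _ in range(len(A))]
    for sigma, u, v in truncated_svd(A, k):
        for i in range(len(u)):
            for j in range(len(v)):
                Ak[i][j] += sigma * u[i] * v[j]
    return Ak
```

For `A = diag(3, 2, 1)` and `k = 2`, the result approximates `diag(3, 2, 0)`, and the remaining spectral error is $1 = \sigma_3$.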

Then we consider $z^T A$ with $z \in H_{m-k}$.

What Is The Meaning Of Low Rank Matrix Quora

Eigen Decomposition And Singular Value Decomposition Ppt Video Online Download

Pdf Matrices With Hierarchical Low Rank Structures

Dimensionality Reduction For Kmeans Clustering And Low Rank

Matrix Decomposition And Its Application In Statistics Nishith

Pdf Fast Monte Carlo Algorithms For Matrices Ii Computing A Low Rank Approximation To A Matrix Semantic Scholar

Http Cs Stanford Edu People Mmahoney Cs369m Lectures Lecture3 Pdf

The Low Rank Function Approximation Of A Bivariate Function Download Scientific Diagram

Randomized Low Rank Approximation And Pca Beyond Sketching Cameron Musco Youtube

Butterflies Are All You Need A Universal Building Block For Structured Linear Maps Stanford Dawn

Eigen Decomposition And Singular Value Decomposition Based On

Https Www Sjsu Edu Faculty Guangliang Chen Math253s20 Lec7matrixnorm Pdf
