Matrix Multiplication Kernel

A i1 a in. The first example uses sequential execution for all loops.

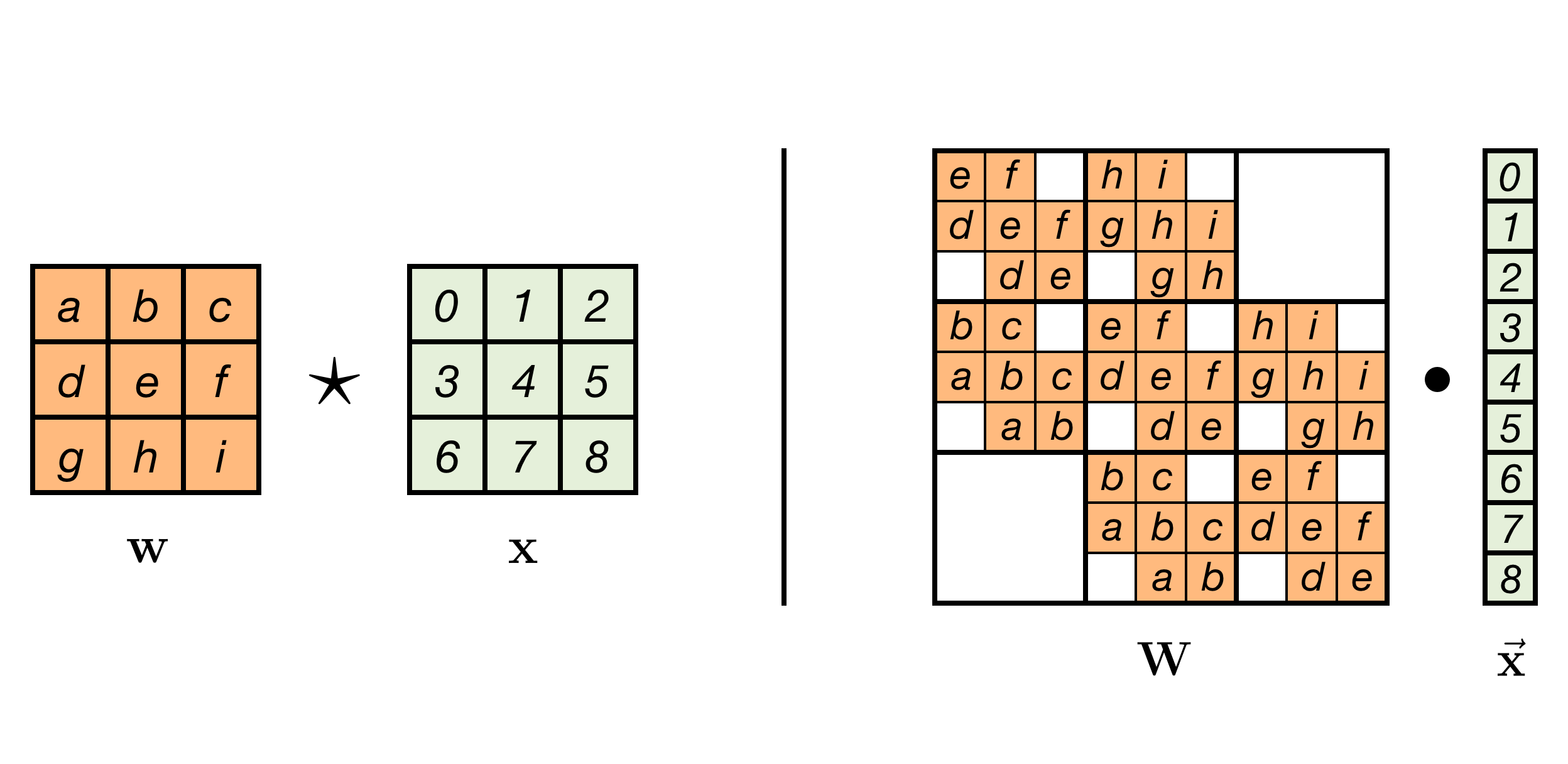

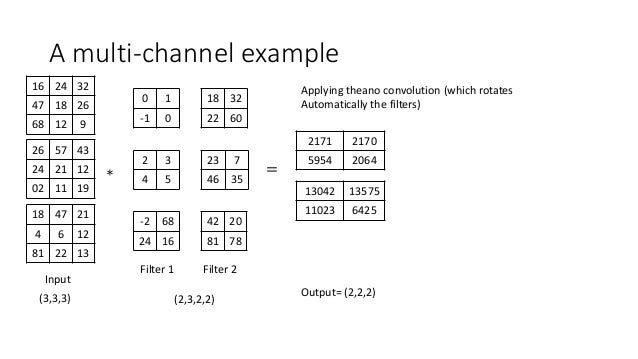

Convolution As Matrix Multiplication

In general matrix multiplication is defined for rectangular matrices.

Matrix multiplication kernel. SpMxV is a mathematical kernel that takes the form of. It ensures that extra threads do not do any work. Kernel methods are a highly effective and widely used collection of modern ma-chine learning algorithms.

B jn 1 C A 0 B B. A jk M matrix multiplied by a kl N matrix results in a jl P matrix. 1 C C A.

Heres the code for the kernel. The formula used to calculate elements of d_P is. Assume A is a p w matrix and B is a w q matrix So C will be p q matrix.

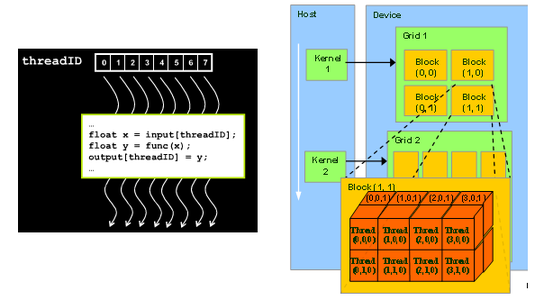

The performance of sparse-matrix algorithms tends to be much. Well start with a very simple kernel for performing a matrix multiplication in CUDA. Matrix multiplication between a IxJ matrix d_M and JxK matrix d_N produces a matrix d_P with dimensions IxK.

Matrix multiplication Matrix inverse Kernel and image Radboud University Nijmegen Matrix multiplication For A an m n matrix B an n p matrix. A fundamental limitation of virtually all such methods are computations involving the kernel matrix that naïvely scale quadratically eg constructing the kernel matrix and matrix-vector multiplication. I am trying to optimize a Matrix-vector multiplication kernel for an Intel CPU-GPU system.

0 B b j1. More generally SpMxV can be represented as. So here is an example for 22 kernel and 33 input.

0 B B. I am pretty sure this is hard to understand just from reading. Fortunately our kernel can be easily extended to a general matrix multiplication kernel.

I am aware of DSYR2K but that routine only helps when we are multiplying AA which would result in a symmetric matrix. Currently our kernel can only handle square matrices. Lets say we want to multiply matrix A with matrix B to compute matrix C.

The above condition is written in the kernel. Spring 2016 Matrix Calculations 10 43. Const int newID0 get_group_id1TRANSPOSEY tx.

Tuning a naive kernel. Using shared memory. Now we will have a look at how to use Kernel Tuner to find the best performing combination of.

Is there a functionsubroutine in MKL to specify that the product matrix multiplication of two different matrices would be symmetric so that matrix multiplication happens faster. Matrix multiplication is simple. The matrix multiplication kernel variations described in this section use execution policies to express the outer row and col loops as well as the inner dot product loop using the RAJA kernel interface.

The matrix multiplication kernel variations described in this section use execution policies to express the outer row and col loops as well as the inner dot product loop using the RAJA kernel interface. AB C is an m p matrix. __kernel void gemv const __global float4 M const __global float4 V uint width uint height __global float W __local float partialDotProduct Each work-group handles as many matrix.

You compute a multiplication of this sparse matrix with a vector and convert the resulting vector which will have a size n-m12 1 into a n-m1 square matrix. Output newID1Q newID0 buffer tx ty. I know that gemvBLAS-2 is memory bound but I want to obtain the best performance possible.

1 C C A c ij Xn k1 a ikb kj A. Introduction to the Intel Math Kernel LibraryIntroduction to the Intel Math Kernel Library Multiplying Matrices Using dgemmMultiplying Matrices Using dgemm Measuring Performance with Intel MKL Support FunctionsMeasuring Performance with Intel MKL Support Functions. With the boundary condition checks the tile matrix multiplication kernel is just one more step away from being a general matrix multiplication kernel.

They illustrate more complex policy examples and show additional RAJA kernel features. Y Ax 1 where A is an MN sparse matrix the majority of the elements are zero y is an M1 vector and x is an N1 vector. Matrix multiplication tutorial Naive CUDA kernel.

To learn to write a tiled matrix -multiplication kernel Loading and using tiles for matrix multiplication Barrier synchronization shared memory Resource Considerations Assume that Width is a multiple of tile size for simplicity. Store the transposed result coalesced if newID0 Q newID1 P. Now lets see what the new matrix-multiplication kernel looks like.

YAx 2 where α and β are scalars. Const int newID1 get_group_id0TRANSPOSEX ty.

Tiled Matrix Multiplication Kernel It Shared Memory To Reduce Download Scientific Diagram

Invertible Convolutions Emiel Hoogeboom

Is There An Function In Pytorch For Converting Convolutions To Fully Connected Networks Form Stack Overflow

Opencl Matrix Multiplication Sgemm Tutorial

2 D Convolution As A Matrix Matrix Multiplication Stack Overflow

Multiplication Kernel An Overview Sciencedirect Topics

A Sparse Matrix Multiplication Assembly Kernel The Compute Kernel Is Download Scientific Diagram

Multiplication Kernel An Overview Sciencedirect Topics

Running A Parallel Matrix Multiplication Program Using Cuda On Futuregrid

Do The Filters In Deconvolution Layer Same As Filters In Convolution Data Science Stack Exchange

Why Gemm Is At The Heart Of Deep Learning Pete Warden S Blog

Multiplication Kernel An Overview Sciencedirect Topics

An Example Of The Deconvolution Process Using Transpose Convolution In Download Scientific Diagram

Opencl Matrix Multiplication Sgemm Tutorial

2 D Convolution As A Matrix Matrix Multiplication Stack Overflow

In A Convolutional Neural Network Cnn When Convolving The Image Is The Operation Used The Dot Product Or The Sum Of Element Wise Multiplication Cross Validated

Convolution As Matrix Multiplication

Matrix Multiplication To Achieve Convolution Operation Programmer Sought

Performing Convolution By Matrix Multiplication F Is Set To 3 In This Download Scientific Diagram